What should newsrooms do with AI?

As OpenAI and Google explore news partnerships, risks are everywhere

I.

Recently some gamers on a World of Warcraft forum noticed that a website called Z League is publishing articles that appear to be based on popular Reddit threads about the game. While the articles carry human-sounding bylines, the site carries no contact information for them, and the authors do not seem to have LinkedIn profiles.

Moreover, the Redditors observed, their articles bear all the hallmarks of artificial intelligence-written copy: bland writing laden with cliches; a heavy reliance on bullet points; and a structure that more closely resembles a book report than a traditional news article.

Most of the word count in these pieces is taken up by comments from Redditors, presumably scraped directly from the site, and linked together with bare-bones transitions.

“Reddit user OhhhYaaa shared the news about the ban, and many Counter-Strike players were quick to express their outrage and disappointment,” reads one passage in a piece headlined “Counter-Strike Players Can No Longer Wear Crocs During ESL Pro Tour Events.” “User RATTRAP666 simply commented, ‘jL in shambles,’ reflecting the sentiment of many players who feel that the new rule is unnecessary and unfair.”

Annoyed that Z League was repackaging their comments in this way — and monetizing them on a page choked to the breaking point with disruptive advertising — the Redditors proposed a prank: posting enthusiastically about their anticipation of “Glorbo,” an entirely fictional (and never-described) new feature of WoW. If Z League’s AI were as dumb as the gamers suspected, surely Z League would post about Glorbo mania.

On Thursday, the Redditors’ dreams came true. (Hat tip to The Verge’s Makena Kelly for pointing this out.) “World of Warcraft (WoW) Players Excited for Glorbo’s Introduction,” the SEO-friendly headline declared. The bot’s selection of quotes from Reddit was, in its way, perfect:

Reddit user kaefer_kriegerin expresses their excitement, stating, “Honestly, this new feature makes me so happy! I just really want some major bot operated news websites to publish an article about this.” This sentiment is echoed by many other players in the comments, who eagerly anticipate the changes Glorbo will bring to the game.

Ever since CNET was caught using an AI to misreport dozens of personal finance stories, the media world has been bracing for the arrival of sites like this one: serving brain-dead forum scraps, stitched together by a know-nothing API, generated ad infinitum and machine-gunned into Google’s web crawler in an effort to leech away ad revenue from more reputable sites.

Content farms are nothing new, of course; long before there was ChatGPT, there were eHow and Outbrain and Taboola. What’s different this time around, though, is that more reputable publishers appear poised to get in on the act.

AI and journalism are about to collide in ways both public and private. And before they do — for the love of Glorbo — we ought to talk about how that should work.

II.

The previous wave of stories around the intersection of AI and the news business centered on copyright issues. Is it legal for companies like OpenAI and Google to train large language models using news stories they scrape from the web? Artists, writers, and filmmakers have already filed lawsuits arguing that it is not; lawsuits from publishers seem likely as well.

For that reason, it doesn’t feel like a coincidence that some AI makers have belatedly begun trying to buy some goodwill. Last week, OpenAI signed a deal with the Associated Press that, among other things, lets the company train its language models on historical AP copy. And this week, OpenAI announced a $5 million grant to the American Journalism Project, which makes grants to local news publishers around the country.

Tellingly, the grant is paired with “up to $5 million” in credits for news organizations to experiment with its generative AI models. Just as Facebook’s investments in journalism were often designed to get publishers buying ads on the platform, OpenAI is seeking to make subscription-based AI tools a part of journalists’ workflows.

In this regard, though, OpenAI already has competition. On Wednesday, the New York Times reported that Google is developing a generative AI writing tool known as Genesis that it has demonstrated for the Times, the Washington Post, and others.

Here are Benjamin Mullin and Nico Grant:

The tool, known internally by the working title Genesis, can take in information — details of current events, for example — and generate news content, the people said, speaking on the condition of anonymity to discuss the product.

One of the three people familiar with the product said that Google believed it could serve as a kind of personal assistant for journalists, automating some tasks to free up time for others, and that the company saw it as responsible technology that could help steer the publishing industry away from the pitfalls of generative A.I.

As Joshua Benton points out at Nieman Lab, such a tool might do some good — augmenting journalists’ talents without replacing them. It could create a mediocre first draft, for example — typically the most labor-intensive part of writing — freeing up more time for reporting and editing.

I also expect that AI tools could be helpful in quickly drafting context and background. Many news stories report incremental developments in their first few paragraphs, and then fill in the rest with back story that doesn’t change much as the broader narrative unfolds. While writers will still want to avoid plagiarizing themselves, I can see value in an AI tool that quickly assembles the context for process-driven stories in which only the first few hundred words contain original information.

Still, I find it hard not to be cynical about these developments, particularly in Google’s case. Here you have a company that reshaped the web to its own benefit using Chrome, search and web standards; built a large generative AI model from that web using (in part) unlicensed journalism from news publishers; and now seeks to sell that intelligence back to those same publishers in the form of a new subscription product.

Courts will decide whether all that is legal. But if you value competition, digital media, or the web, it is hard to see any of these developments as particularly positive.

III.

Like it or not, though, the tale of Glorbo signals that the age of AI news is upon us. The story to watch over the next six months to a year is which digital publishers embrace these cutting-edge spam techniques, which remain holdouts, and who winds up profiting in the end.

Already, a large handful of publishers have admitted to being, at the very least, AI-curious. Red Ventures, of course. G/O Media. BuzzFeed. Insider.

Generally speaking: The more articles that a news outlet was publishing before ChatGPT arrived, the more likely it is to spin up an AI content farm. In the quantity-over-quality business, you can win by publishing more than the other guy.

As a reader, of course, I’m on the side of the publications paying real people to make phone calls, chase leads, and write blog posts. I spend a good part of most days reading medium-effort writing about news, politics, tech and entertainment. The writers responsible for that work are currently supported by a fragile base of ads and (to a lesser degree) subscriptions.

But a giant AI wave is heading toward the shore. And whole categories of journalism that were once the province of entry-level and mid-career writers are about to be automated away, leaving their futures in question.

Already, or within a few months, generative AI models should be capable of creating the following kinds of stories, which today make up a significant portion of many news sites:

Posts about how to do things.

Posts about recipes.

Posts offering evergreen advice about health, nutrition, and fitness.

Posts about new TV and movie trailers, which mostly serve as placeholders for embedded YouTube videos.

Posts about what happened in the end credits of a movie.

Posts about services raising their prices.

Posts about how to watch events like the Super Bowl, Academy Awards, or Apple’s iPhone announcement online.

Posts about which movies and TV shows are coming to and leaving streaming services this month.

Posts about the “best” laptop, smartphone, and other goods, which can borrow their editorial judgment from review aggregators (and stuff the recommendations with affiliate links).

Meta-reviews of movies, TV shows, and gadgets that summarize reviews on Metacritic, Rotten Tomatoes, and other aggregators.

Roundups of the “best” apps for various tasks, also stuffed with affiliate links.

Posts about viral social media moments, such as two celebrities arguing via Instagram.

Slideshows of celebrity fashions, cute animals, and other viral photography.

Anniversary posts that round up key events on this day in previous years.

Daily crosswords or other puzzles.

To avoid the Glorbo problem, these stories would need human oversight. Over time, though, they’ll probably need less and less of it. And while little of this content, if any, would be good, for lots of people it might be good enough.

A digital media site publishing only AI-generated pablum wouldn’t win many prizes. But it might make money, at least for a while, even as it fuels a race to the bottom in ad prices that forces ever more working writers out of the business.

The optimistic gloss on all this, often parroted by AI-curious media executives, is that offloading posts like those above will free up their reporters to do higher-value work. Presumably, though, they could be doing that work already. The fact that so many have been tasked primarily with writing SEO bait suggests strongly that, in the eyes of their bosses, churning out chum is where their highest value lies.

IV.

To return to the question that headlines this column, then: What should newsrooms do with AI? The responsible thing is to go slowly: testing whether generative AI tools can improve the productivity of their reporters, while bringing intense skepticism to the idea that the products can ever be left unsupervised. They should disclose when and how they use large language models in public-facing work — and, at least for the time being, err on the side of not using them.

My fear, though, is that what I see as publishers’ moral imperative here is at odds with the financial one. The more that sites like Z League seem to succeed, the more that traditional publishers will be tempted to join them.

By the day’s end, a presumably human employee of Z League added the not-quite-accurate tag “satire” to its original post about Glorbo. In a healthy world, the story would serve as a cautionary tale.

In this one, though, we could be about to see Glorbos everywhere.

On the podcast this week: Anthropic CEO Dario Amodei joins us to talk about building the most anxious company in artificial intelligence. Plus, I force Kevin to watch the abominable Netflix show Deep Fake Love (aka Falso Amor).

Apple | Spotify | Stitcher | Amazon | Google

Governing

Apple threatened to pull FaceTime and iMessage access if the U.K. government follows through on new surveillance bills that would require tech companies to undermine encryption. This feels like a serious threat to encryption; I’ll be watching it closely. (Zoe Kleinman / BBC)

The Justice Department and the Federal Trade Commission released a new set of U.S. merger guidelines designed to update longstanding approaches to how the government reviews potentially anticompetitive conduct. The draft changes specifically call out “platform companies” as an area in need of a revised approach to antitrust review. (Brian Fung / CNN)

OpenAI has been testing a version of ChatGPT that can analyze images, but the company has concerns this could lead to facial recognition use cases that violate privacy law. (Kashmir Hill / The New York Times)

TikTok failed a stress test conducted by European regulators to determine if the app complies with the new Digital Services Act. The company says it’s committed to complying by the August 25 deadline. (Alex Barinka / Bloomberg)

Microsoft and Activision Blizzard extended their merger agreement to October 18 to give Microsoft more time to negotiate new deal terms with U.K. regulators. (Tom Warren / The Verge)

Nicholas Burns, the U.S. ambassador to China, had his email account compromised by what security officials believe is a Chinese spying operation. (Dustin Volz and Warren P. Strobel / WSJ)

Researchers across organizations have detected a surge in hateful conduct on Twitter since the takeover by Elon Musk and subsequent changes to the company’s moderation policies. (Aisha Counts and Eari Nakano / Bloomberg)

Instagram won a copyright case brought by two photographers over images embedded onto news websites after the court found that embeds didn’t constitute illegal copies. (Andy Maxwell / TorrentFreak)

The Biden administration added two spyware firms, Intellexa and Hungarian firm Cytrox, to the U.S. Commerce Department’s “Entity List” over concerns their spyware was being used to violate human rights. Good. (Tim Starks and David DiMolfetta / The Washington Post)

Meta’s relationship with news publishers has grown especially chilly in recent months as the company continues its feud with Canada over the country’s “link tax” and deprioritizes news content on Threads. (Hannah Murphy / Financial Times)

TikTok is the most popular news source for 12- to 15-year-olds in the U.K., followed by YouTube and Instagram, according to a new report from Ofcom. (Hibaq Farah / The Guardian)

Employees at Grindr filed a unionization petition and say they’ve signed up a majority of the roughly 100 eligible unit members in an effort to stave off layoffs and better protect LGBTQ workers from ongoing threats. (Josh Eidelson / Bloomberg)

Industry

Apple is working on its own generative AI chatbot, which some employees are calling “Apple GPT,” to compete with Google and OpenAI — but it hasn’t yet decided how to release such a product to consumers. Siri seems like the obvious answer. (Mark Gurman / Bloomberg)

AI companies are turning to synthetic data created by large language models to train newer generative models, now that large swaths of the human-made data on the web have already been used. Synthetic data is not only cheaper, but also sidesteps some copyright and privacy concerns. (Madhumita Murgia / Financial Times)

Meta said it would open source its Llama 2 AI software this week, but its commercial terms clarify that organizations with more than 700 million monthly active users must obtain a license to use the tech. That puts Llama 2 off limits to some of Meta’s biggest competitors. (Moneycontrol)

OpenAI released a new feature for ChatGPT subscribers that lets them give the bot standing information it should always keep in mind, cutting down on repetitive instructions. (David Pierce / The Verge)

Stanford researchers released a new report showing deficiencies in GPT-4 outputs over time, fueling concerns that OpenAI’s chatbot has grown worse at coding and other computational tasks since release. OpenAI has denied the claims. I’m very interested in this mystery! (Benj Edwards / Ars Technica)

Hundreds of thousands of ChatGPT credentials are available for sale on the dark web, alongside a malware-focused alternative called WormGPT. Hackers are interested in weaponizing generative AI. (Ionut Ilascu / Bleeping Computer)

New photo editing app Remini is going viral for an AI-powered headshot feature, but users are voicing concern about changes the software makes to women’s bodies. The app is reported to make women appear skinnier. (Sarah Perez / TechCrunch)

Microsoft’s stock has grown so much since CEO Satya Nadella took over in 2014 that his payouts since taking the job have totaled more than $1 billion. (Pui Gwen Yeung and Anders Melin / Bloomberg)

Meta has scaled back its once-ambitious augmented reality hardware plans as it’s struggled to incorporate technology from subsidiaries and partners to create AR glasses that are both affordable and powerful. (Wayne Ma / The Information)

WhatsApp launched a standalone WearOS app for smartwatches that allows users to send and receive text and voice messages. (Jagmeet Singh / TechCrunch)

The collection of cybersecurity professionals that make up “Infosec Twitter” has dwindled considerably over the past few months, leading data scientist Jay Jacobs to predict the imminent death of the community. (Jay Jacobs / Cyentia Institute)

Twitter is working on a new feature that will let verified organizations post job listings directly to the platform in a bid to compete with LinkedIn. Now’s your chance to hire Catturd2. (Kristi Hines / Search Engine Journal)

TikTok’s newest viral trend is so-called NPC streaming, in which creators live stream themselves performing rote, robotic responses to emotes in exchange for tips. “Ice cream so good” has become an NPC streaming meme. Yum yum. (Gene Park / The Washington Post)

Netflix is expanding its password-sharing restrictions to almost all of its remaining markets after successful tests in the U.S. and Canada contributed to more than 6 million new signups last quarter. (Manish Singh / TechCrunch)

Roblox announced that developers will soon be able to offer subscriptions within games and experiences on the platform in order to “establish a recurring economic relationship with their users.” (Jay Peters / The Verge)

Google will begin enabling its Privacy Sandbox toolkit for Chrome developers, marking an important step in its plan to phase out third-party cookies by Q3 2024. (Jess Weatherbed / The Verge)

Google said it fixed a zero-day exploit in Chrome that was originally discovered by an Apple employee who reportedly failed to disclose it. Another Apple employee ultimately reported the bug to Google. (Lorenzo Franceschi-Bicchierai / TechCrunch)

Google raised the price of its YouTube Premium subscription by $2 to $13.99 per month in the U.S. for both new and existing customers. It also raised the price of its YouTube Music subscription by $1 per month. (Abner Li / 9to5Google)

Instagram announced updated templates for Reels that let creators borrow even more elements of a popular post when making their own. It may lead to more repetitive Reels on the platform. (Mia Sato / The Verge)

Telegram raised $210 million in bond sales this week in order to help it reach a “break-even” point after years of unprofitability. (Manish Singh / TechCrunch)

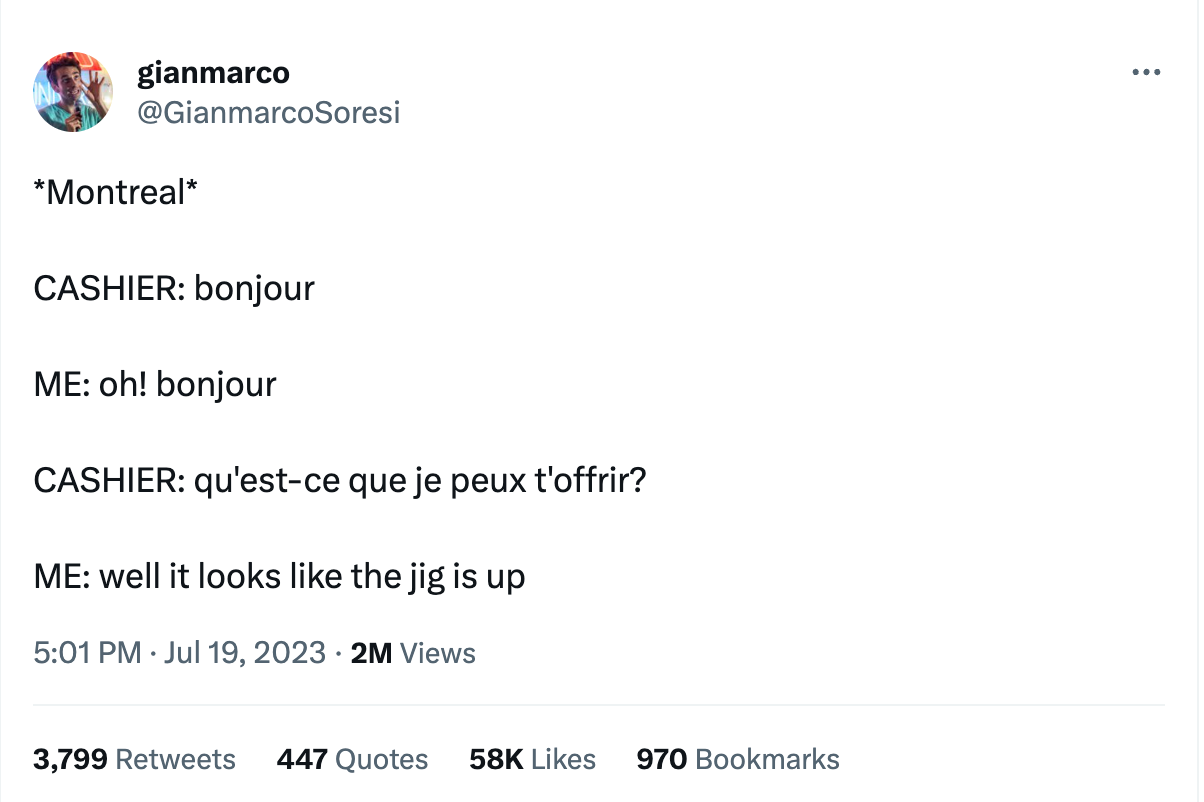

Those good tweets

For more good tweets every day, follow Casey’s Instagram stories.

Talk to us

Send us tips, comments, questions, and sustainable news ecosystems: casey@platformer.news and zoe@platformer.news.

Glory to Glorbo, means glory to me.

Infosec Twitter is now very much Infosec Mastodon, largely on the infosec.exchange server and others.